“Having disagreements about your design doesn’t mean that you need to change course, but it gives you information you wouldn’t have had otherwise.”

The Staff Engineer’s Path

In today’s fast-paced digital world, where agility and scalability are crucial, businesses are constantly seeking ways to improve the performance and maintainability of their web applications. One popular approach to achieving these goals is migrating from a monolithic architecture to a distributed one (micro-frontends). This article series, “Micro-frontend Migration Journey,” shares my personal experience of undertaking such a migration during my time at AWS.

DISCLAIMER: Before we begin, it’s important to note that while this article shares my personal experience, I am not able to disclose any proprietary or internal details of tools, technologies, or specific processes at AWS or any other organization. I am committed to respecting legal obligations and ensuring that this article focuses solely on the general concepts and strategies involved in the micro-frontend migration journey. The purpose is to provide insights and lessons learned that can be applicable in a broader context, without divulging any confidential information.

Motivation for Migration

I learned about micro-frontends (as I suspect many of you did) from the article on Martin Fowler’s blog. It presented different ways of composing a micro-frontend architecture in a framework-agnostic manner. As I delved deeper into the subject, I realized that our existing monolithic architecture was becoming a significant bottleneck for our team’s productivity and was impeding the overall performance of our application.

One of the key factors that pushed me towards considering a migration was the increasing bundle size of our application. After conducting a thorough bundle analysis in the summer of 2020, I discovered that since the initial launch in early 2019, the gzipped bundle size had grown from 450KB to 800KB (almost 4MB parsed), nearly twice the original size. Given the success of our service and its predicted continued growth, it was clear that this trend would persist, further impacting the performance and maintainability of our application.

While I was enthusiastic about the concept of micro-frontends, I also recognized that we were not yet ready to adopt them due to specific challenges we faced:

- Small Organizational Structure: At the time of my analysis, our organization was relatively small, and I was the only full-time frontend engineer on the team. Migrating to a micro-frontend architecture required a significant investment in terms of organizational structure and operational foundation. It was crucial to have a mature structure that could effectively handle the distributed architecture and reflect the dependencies between different frontend components.

- Limited Business Domain: Although micro-frontends can be split based on bounded contexts and business capabilities (learn more in the “Domain-Driven Design in micro-frontend architecture” post), our core business domain was not extensive enough to justify a complete decoupling into multiple micro-frontends. However, there were visible boundaries within the application that made sense to carve out and transition to a distributed architecture.

Considering these factors, I realized that a gradual approach was necessary. Rather than a complete migration to micro-frontends, I aimed to identify specific areas within our application that could benefit from a distributed architecture. This would allow us to address performance and scalability concerns without disrupting the overall organizational structure or compromising the integrity of our business domain. It would also give us some time to grow the team and observe the direction of the business.

Please note that if you want to tackle your app’s performance (bundle size) problem solely by adopting a micro-frontend architecture, it might not be the best idea. It would be better to start with a distributed monolith architecture that leverages lazy loading (dynamic imports) instead. Moreover, I think a distributed monolith would handle the bundle size issue more gracefully than a micro-frontend architecture, because micro-frontends are very likely to have some shared code that isn’t separated into vendor chunks and ends up built into each application bundle (that’s one of the cons of such a distributed architecture: you need to make trade-offs about what to share, when, and how).

However, a distributed monolith architecture will not scale as well as micro-frontends. When your organization grows fast, your team will likely grow at the same pace. There will be an essential need to split the code base into different areas of ownership controlled by different teams. Each team will need its own release cycle, independent of the others, and each team will appreciate a code base that is focused purely on its domain and builds fast (code isolation -> better maintainability/less code to maintain and build -> better testability/fewer tests to maintain and execute).
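For illustration, here is a minimal TypeScript sketch of the lazy-loading building block a distributed monolith relies on: feature modules are registered up front but only downloaded the first time they are needed, and repeated requests reuse the same promise. FeatureRegistry and the module paths mentioned below are hypothetical, not taken from our code base.

```typescript
type Loader<T> = () => Promise<T>;

// Hypothetical registry for a distributed monolith: feature modules are registered
// up front but only downloaded the first time they are needed.
class FeatureRegistry<T> {
  private readonly loaders = new Map<string, Loader<T>>();
  private readonly pending = new Map<string, Promise<T>>();

  register(name: string, loader: Loader<T>): void {
    this.loaders.set(name, loader);
  }

  // Repeated and concurrent calls share one promise; a failed load is dropped so a later call retries.
  load(name: string): Promise<T> {
    const existing = this.pending.get(name);
    if (existing) return existing;

    const loader = this.loaders.get(name);
    if (!loader) return Promise.reject(new Error(`Unknown feature: ${name}`));

    const promise = loader().catch((error: unknown) => {
      this.pending.delete(name);
      throw error;
    });
    this.pending.set(name, promise);
    return promise;
  }
}
```

In the application you would register real dynamic imports, for example registry.register('reports', () => import('./features/reports')). Bundlers such as webpack emit each dynamic import() target as a separate chunk, so that code is only downloaded when the feature is first used, while everything still lives in one repository and one deployment.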

The Start

To garner support from leadership, I crafted a persuasive technical vision document that encompassed a comprehensive performance analysis, including Web Vitals metrics, and outlined the various phases of the migration towards distributed frontends. One of the intermediate phases of this migration was to establish a distributed monolith architecture, where multiple modules/widgets could be delivered asynchronously via lazy-loading techniques while leveraging shared infrastructure, such as an S3 bucket and CDN, between the core service and the widgets. As I outlined in my previous article, the main idea of this type of document is to describe the future as you’d like it to be once the objectives have been achieved and the biggest problems are solved. It’s not about the execution plan!

Almost 1 year later, the time had finally come to put my micro-frontend migration plan into action. With the impending expansion into a new domain and a larger team at our disposal, we were well-equipped to execute the migration. It felt like a golden opportunity that we couldn’t afford to miss. After all, remaining confined to the monolithic architecture would mean perpetually grappling with its limitations. The limited timeline to expand into a new domain served as a catalyst, propelling us toward building a more scalable and maintainable architecture right away instead of having short and slow iterations!

To execute the migration and simultaneously handle the work in the new domain, we divided the teams into two dedicated groups. The feature work, which had higher priority, required more resources and needed to iterate at a faster pace. To ensure the integrity and comprehensive understanding of the migration process, it made sense to assign a small dedicated team specifically responsible for handling the migration. However, we couldn’t proceed with the feature work without first ensuring that the micro-frontend concept would prove successful.

To mitigate risks and provide a clear roadmap, it was crucial to create a low-level design document that included precise estimates and a thorough risk assessment. This document served as a blueprint, outlining the necessary steps and considerations for the migration. The pivotal milestone in this process was the development of a proof of concept that would demonstrate the successful integration of all components according to the design. This milestone, aptly named the “Point of no return,” aimed to validate the feasibility and effectiveness of the micro-frontend architecture. While I was optimistic about the success of the migration, it was essential to prepare for contingencies. Consequently, I devised a Plan B as a backup strategy in case the initial concept didn’t yield the desired results. It included an additional seven days in the estimates, reserved specifically for me to cry into my pillow, plus a few days to connect a new feature module entry to the core via lazy loading (remember the distributed monolith?).

The Design

When designing micro-frontends, there are generally three approaches to composition, each defined by where the runtime app resolution takes place. The beauty of these approaches is that they are not mutually exclusive and can be combined as needed.

Server-side composition

The basic idea is to use a reverse proxy server to split micro-frontend bundles per page and perform a hard page reload based on the route URL.
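To make the routing idea concrete, here is a small TypeScript sketch of the decision a reverse proxy makes on every full page request in server-side composition: match the URL path against a table of route prefixes and forward the request to the upstream that owns that page. The route table, the upstream hostnames and resolveUpstream are hypothetical; in practice this logic usually lives in the proxy’s own configuration.

```typescript
// Hypothetical route table: URL path prefix -> upstream serving that micro-frontend's pages.
const ROUTES: Record<string, string> = {
  '/settings': 'https://settings.example.com',
  '/reports': 'https://reports.example.com',
  '/': 'https://shell.example.com',
};

// Longest-prefix match on whole path segments, so '/settings-old' does not match '/settings'.
function resolveUpstream(pathname: string, routes: Record<string, string> = ROUTES): string | undefined {
  const prefixes = Object.keys(routes).sort((a, b) => b.length - a.length);
  for (const prefix of prefixes) {
    if (prefix === '/' || pathname === prefix || pathname.startsWith(`${prefix}/`)) {
      return routes[prefix];
    }
  }
  return undefined;
}
```

Because every cross-app navigation is a full page request, the proxy (not the browser) decides which app answers, which is why in-memory client state does not survive the switch between apps.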

Pros:

- Simple to implement

Cons:

- Global state won’t be synced between the micro-frontend apps. This was a clear no-go for us because we had long-running background operations performed on the client side. You might argue that we could persist a snapshot of this operations “queue” to local storage and read from it after a hard reload, but for security reasons we were not able to implement this. This is just one example of global state; others include the state of the side navigation panels (expanded/collapsed), toast messages, etc.

- The hard refresh when navigating across micro-apps is not very customer-friendly. There is a way to cache shared HTML using service workers, but it adds complexity to maintain.

- Additional operational and maintenance costs for the infrastructure: a proxy server for each micro-frontend app (this can be avoided by reading from the CDN directly) and separate infrastructure to deploy common (vendor) dependencies so they can be reused by multiple pages and properly cached by browsers.

Edge-side composition

Another approach to micro-frontend composition is edge-side composition, which involves combining micro-frontends at the edge layer, such as a CDN. For instance, Amazon CloudFront supports Lambda@Edge integration, enabling the use of a shared CDN to read and serve the micro-frontend content.
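As a rough sketch of edge-side composition, the TypeScript below shows an origin-request handler in the shape Lambda@Edge uses (the request lives at event.Records[0].cf.request), rewriting page navigations to the HTML document of the micro-frontend that owns the route in a shared S3 bucket. The bucket layout, route names and rewriteUri are assumptions for illustration, and the event types are trimmed to the fields used here. The mapping is hard-coded because Lambda@Edge functions don’t support environment variables.

```typescript
// Minimal local types for the parts of the CloudFront event this sketch touches.
interface CloudFrontRequest {
  uri: string;
  [key: string]: unknown;
}
interface OriginRequestEvent {
  Records: Array<{ cf: { request: CloudFrontRequest } }>;
}

// Hypothetical layout of the shared bucket: first path segment -> that app's HTML document.
const APP_DOCUMENTS = new Map<string, string>([
  ['settings', '/apps/settings/index.html'],
  ['reports', '/apps/reports/index.html'],
]);
const SHELL_DOCUMENT = '/apps/shell/index.html';

function rewriteUri(uri: string): string {
  // Crude check: anything ending in a file extension is an asset and passes through unchanged.
  if (/\.[a-z0-9]+$/i.test(uri)) return uri;
  const firstSegment = uri.split('/')[1] ?? '';
  return APP_DOCUMENTS.get(firstSegment) ?? SHELL_DOCUMENT;
}

// Returning the (modified) request tells CloudFront to fetch that path from the origin.
async function handler(event: OriginRequestEvent): Promise<CloudFrontRequest> {
  const request = event.Records[0].cf.request;
  request.uri = rewriteUri(request.uri);
  return request;
}
```

When deploying, the module would export handler as the function’s entry point and the function would be associated with the distribution’s origin-request trigger.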

Pros:

- Fewer infrastructure pieces to maintain: no need to have proxy servers, separate CDNs for each micro-app

- Virtually infinite scaling using serverless technology

- Better latency compared to standalone proxy servers

Cons:

- Cold start time might become an issue

- Lambda@Edge is not supported in all AWS regions if you need to have multi-region (isolated) infrastructure

Client-side composition

Client-side composition is another approach to micro-frontend architecture that utilizes client-side micro-frontend orchestration techniques, decoupled from the server implementation.

The key player in this architecture is a container (shell) application that has the following responsibilities:

- Addressing cross-cutting concerns: The container application handles centralized app layout, site navigation, footer, and help panel. Integration with micro-frontends that have cross-cutting concerns occurs through an Event Bus, where synthetic events are sent and handled within the global window scope.

- Orchestration of micro-frontends: The container app determines which micro-frontend bundle to load and when, based on the application’s requirements and user interactions.

- Composing global dependencies: The container app composes all global dependencies, such as React, SDKs, and UI libraries, and exposes them as a separate bundle (vendor.js) that can be shared among the micro-frontends.
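Here is a minimal TypeScript sketch of the Event Bus contract described in the first bullet. The event names and payload types are hypothetical. Instead of reaching for window directly, createEventBus takes an EventTarget, so the container and the micro-frontends can pass window in the browser, while tests can use a standalone EventTarget.

```typescript
// Hypothetical event contract; a shared package could own this map.
interface BusEvents {
  'toast:show': { message: string; kind: 'info' | 'error' };
  'sidenav:toggle': { expanded: boolean };
}

// Event subclass carrying a typed payload (CustomEvent works too in browsers).
class BusEvent<K extends keyof BusEvents> extends Event {
  constructor(type: K, readonly detail: BusEvents[K]) {
    super(type);
  }
}

function createEventBus(target: EventTarget) {
  return {
    // dispatchEvent calls listeners synchronously, in registration order.
    emit<K extends keyof BusEvents>(type: K, detail: BusEvents[K]): void {
      target.dispatchEvent(new BusEvent(type, detail));
    },
    // Returns an unsubscribe function so a micro-frontend can clean up when it unmounts.
    on<K extends keyof BusEvents>(type: K, handler: (detail: BusEvents[K]) => void): () => void {
      const listener = (event: Event) => handler((event as BusEvent<K>).detail);
      target.addEventListener(type, listener);
      return () => target.removeEventListener(type, listener);
    },
  };
}
```

Keeping the event map in a shared package gives every micro-frontend compile-time checks on event names and payloads, while the runtime coupling stays limited to plain DOM events.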

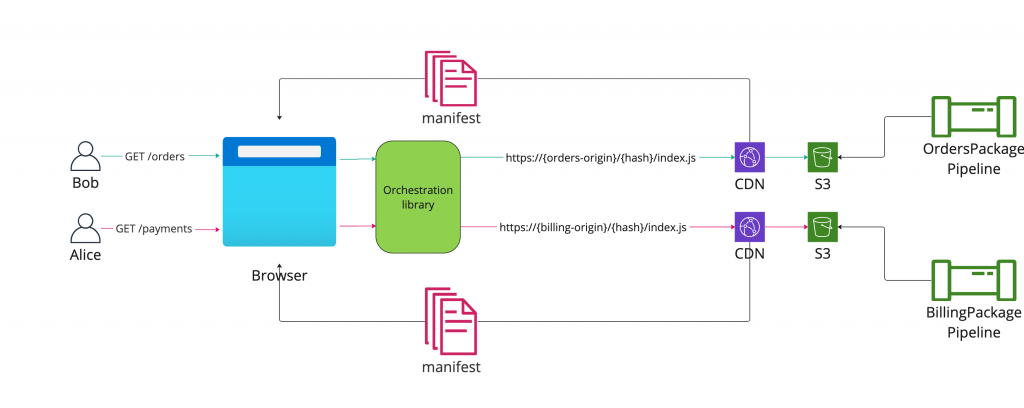

The general idea is that each micro-frontend build produces two types of asset files:

{hash}/index.js: This serves as the entry point for the micro-frontend application, with the hash representing a unique identifier for the entire build. The hash acts as a prefix key for each bundle in the S3 bucket. It’s important to note that multiple entry points might exist, but the hash remains the same for all of them.

manifest.json: This is a manifest file that contains paths to all entry points for the micro-frontend application. This file always lives in the root of the S3 bucket, so the container can discover it easily. I recommend enabling versioning on the S3 bucket to get better observability of changes to this file. If you are using webpack to build your project, I highly recommend WebpackManifestPlugin, which does all the heavy lifting for you.
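As a sketch of how a micro-frontend’s build could follow this contract with webpack 5, the fragment below names every entry point after the build’s full hash and treats React as an external provided by the container’s vendor.js. It assumes vendor.js assigns window.React and window.ReactDOM before any micro-frontend bundle runs; the entry path is hypothetical. In a real config you would also add new WebpackManifestPlugin() (from the webpack-manifest-plugin package) to plugins to emit manifest.json, and export the object from webpack.config.ts.

```typescript
// Fragment of a micro-frontend's webpack.config.ts (webpack 5). The entry path is hypothetical.
const microFrontendConfig = {
  mode: 'production' as const,
  entry: {
    // Emitted as {hash}/index.js; further entries share the same hash prefix.
    index: './src/index.tsx',
  },
  output: {
    // [fullhash] is the hash of the whole compilation, so all entries of one build share it.
    filename: '[fullhash]/[name].js',
  },
  externals: {
    // `import React from 'react'` resolves to the global React exposed by vendor.js.
    react: 'React',
    'react-dom': 'ReactDOM',
  },
};
```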

The container is only aware of the micro-frontend asset source domain URL (CDN origin) based on the stage and region. During the initial page load, the container downloads the manifest file for each micro-frontend application. The manifest file is tiny (~100 bytes), so it doesn’t noticeably impact page load time and scales well even when aggregating multiple micro-frontends within one container. It’s crucial not to treat the manifest file as immutable in the browser’s cache: unlike the hash-prefixed bundles, it changes with every deployment, so it must not be cached aggressively.
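A minimal sketch of the container side might look like this in TypeScript. It assumes a manifest format that maps entry names to paths relative to the CDN origin (for example {"index.js": "3f9c2a/index.js"}) and a made-up origin naming scheme per stage and region; makeOriginResolver, resolveEntryUrls and loadManifests are hypothetical helpers. All manifests are requested in parallel on the initial page load with cache: 'no-cache', so the browser revalidates them with the CDN instead of reusing a stale copy. The local overrides map covers a local setup where only some micro-frontends run on a dev server.

```typescript
// Assumed manifest format: entry name -> path relative to the CDN origin.
type Manifest = Record<string, string>;

// Hypothetical naming scheme: one CDN origin per app, stage and region.
// Local overrides point individual apps at a dev server; the rest keep using the CDN.
function makeOriginResolver(
  stage: string,
  region: string,
  localOverrides: ReadonlyMap<string, string> = new Map(),
): (app: string) => string {
  return (app) => localOverrides.get(app) ?? `https://${app}.${stage}.${region}.cdn.example.com/`;
}

// Turns manifest paths into absolute URLs; the origin must end with "/".
function resolveEntryUrls(origin: string, manifest: Manifest): string[] {
  return Object.values(manifest).map((path) => new URL(path, origin).href);
}

// Fetches every app's manifest in parallel during the initial page load.
async function loadManifests(
  apps: readonly string[],
  originFor: (app: string) => string,
  fetchFn: typeof fetch = fetch,
): Promise<Record<string, string[]>> {
  const entries = await Promise.all(
    apps.map(async (app) => {
      const origin = originFor(app);
      // 'no-cache' makes the browser revalidate with the CDN, so a new deployment is picked up.
      const response = await fetchFn(new URL('manifest.json', origin).href, { cache: 'no-cache' });
      if (!response.ok) {
        throw new Error(`Failed to load manifest for ${app}: HTTP ${response.status}`);
      }
      const manifest = (await response.json()) as Manifest;
      return [app, resolveEntryUrls(origin, manifest)] as const;
    }),
  );
  return Object.fromEntries(entries);
}
```

The container would then load each resolved URL (by appending a script element, or with import() if the bundles are ES modules) when the corresponding route becomes active.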

Choosing the right orchestration library is the biggest challenge in this composition and will be discussed in the following chapter.

Pros:

- Agnostic to server implementation: This approach can be implemented without any specific server requirements, offering flexibility in the backend technology used. As shown in the picture above, you don’t even need to run a server at all.

- Preserving global state: By using a container (shell) app, global state can be maintained when switching between micro-frontends. This ensures a seamless user experience and avoids losing context during transitions.

- Decentralized approach: Each micro-frontend can independently decide what data to ship to the browser to bootstrap itself. The container app simply follows a well-defined contract, allowing for greater autonomy and modularity.

- Simple local setup: Asset sources can be easily switched between production and local URLs based on development needs. The manifest file helps the container app discover and load the required micro-frontends. Developers can focus on running only the container and the specific micro-frontends they are working on.

Cons:

- More network hops to fetch the manifest file: As the container needs to retrieve the manifest file for each micro-frontend, there may be additional network requests and potential latency compared to other composition approaches. This can be mitigated by loading all manifests upfront on the initial page load or by introducing some preloading techniques.

- Compliance with common contract: Every micro-frontend needs to adhere to a common contract for producing builds. This can be facilitated through shared configurations and standardized development practices to ensure consistency across the micro-frontends (more about this in the following parts).

Hybrid composition

As I mentioned earlier in this chapter, all of these composition patterns can be mixed and matched within the same shell application. Here is an example of what this can look like:

Recommendation

I recommend starting with a homogeneous approach: select the composition pattern that suits you best and start building the infrastructure around it. For us, client-side composition was the best option, but for the future, we considered switching some regions to edge-side composition (based on the availability of Lambda@Edge).

Choosing orchestration library

When it comes to implementing client-side composition in a micro-frontend architecture, selecting the right orchestration library is a critical decision. The chosen library will play a crucial role in managing the dynamic loading and coordination of micro-frontends within the container application. Several popular orchestration libraries exist, each with its own strengths and considerations.

Single-spa

Single-spa is a widely adopted orchestration library that provides a flexible and extensible approach to micro-frontend composition. It allows developers to create a shell application that orchestrates the loading and unloading of multiple micro-frontends. It also provides fine-grained control over lifecycle events and supports different frameworks and technologies.
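single-spa expects each registered application to provide bootstrap, mount and unmount lifecycle functions that return promises. The TypeScript sketch below shows that contract with a hypothetical createLifecycles helper and a framework-agnostic render callback; only the name prop is typed here, although single-spa passes more. In practice, adapters such as single-spa-react generate these functions for you.

```typescript
// single-spa passes props such as the application name to every lifecycle function.
interface LifecycleProps {
  name: string;
}

// Hypothetical render function supplied by the micro-frontend; it returns a cleanup callback.
type Render = (props: LifecycleProps) => () => void;

function createLifecycles(render: Render) {
  let cleanup: (() => void) | undefined;
  return {
    // One-time setup before the first mount (nothing to do in this sketch).
    bootstrap: async (_props: LifecycleProps): Promise<void> => {},
    // Called whenever the app's route becomes active.
    mount: async (props: LifecycleProps): Promise<void> => {
      cleanup = render(props);
    },
    // Called when the route is left; tear down everything mount created.
    unmount: async (_props: LifecycleProps): Promise<void> => {
      cleanup?.();
      cleanup = undefined;
    },
  };
}
```

The micro-frontend’s entry would export these three functions, and the container would register it with single-spa’s registerApplication, passing the application’s name, a function that loads its bundle and an activeWhen route such as '/settings'.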

Pros:

- Framework agnostic: The library works well with various frontend frameworks like React, Angular, Vue.js, and more.

- Flexible configuration: It offers powerful configuration options for routing, lazy-loading, and shared dependencies.

- Robust ecosystem: single-spa has an active community and a rich ecosystem of plugins and extensions.

Cons:

- Learning curve: Getting started with single-spa may require some initial learning and understanding of its concepts and APIs.

- Customization complexity: As the micro-frontend architecture grows in complexity, configuring and managing the orchestration can become challenging.

Qiankun

Qiankun is a powerful orchestration library developed by the Ant Financial (Alibaba) team. It uses a partial HTML approach for composition. On the micro-frontend app side, it produces a plain HTML snippet with all entrypoints to be loaded. After consuming this HTML file, the container does all the orchestration and mounts the app. In this configuration, partial HTML plays the role of a manifest file that I talked about in the previous chapter.
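To illustrate the “partial HTML as manifest” idea, here is a deliberately simplified TypeScript sketch that pulls script URLs out of an HTML entry and resolves them against the micro-app’s base URL. This is not how qiankun is implemented (it relies on the import-html-entry package, which also handles inline scripts and styles); extractScriptSources and the URLs are hypothetical.

```typescript
// Simplified illustration only: a regex is enough to show the idea, not to parse arbitrary HTML.
function extractScriptSources(html: string, baseUrl: string): string[] {
  // Matches <script ... src="..."> where src is a standalone attribute (not e.g. data-src).
  const pattern = /<script\b[^>]*\ssrc\s*=\s*["']([^"']+)["']/gi;
  return Array.from(html.matchAll(pattern), (match) => new URL(match[1], baseUrl).href);
}
```

Everything else in the snippet (DOCTYPE, meta, body) is ignored by the container, which is the redundancy mentioned in the cons below.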

Pros:

- Framework agnostic: Qiankun supports various frontend frameworks, including React, Vue.js, Angular, and more.

- Simplified integration: Qiankun provides a set of easy-to-use APIs and tools for creating and managing micro-frontends.

- Scalability and performance: Qiankun offers efficient mechanisms for code sandboxing, state isolation, and communication between micro-frontends.

Cons:

- Dependency conflicts: Managing shared dependencies and ensuring compatibility across micro-frontends may require careful configuration and consideration.

- Learning curve: While Qiankun provides extensive documentation, adopting a new library may involve a learning curve for your development team.

- Redundant data sent over the wire: The partial HTML snippet contains redundant data (body, meta, DOCTYPE tags) that needs to be sent via the network.

Module federation

Module Federation, a feature provided by Webpack, has gained significant attention and hype in the web development community. This technology allows developers to share code between multiple applications at runtime, making it an attractive option for building micro-frontends. With its seamless integration with Webpack and runtime flexibility, Module Federation has become a popular choice for managing and orchestrating micro-frontends.
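For comparison, here is roughly what a Module Federation setup looks like: the options you would pass to webpack 5’s ModuleFederationPlugin (available as require('webpack').container.ModuleFederationPlugin) in a remote micro-frontend and in the host container. App names, exposed paths, version ranges and the CDN URL are hypothetical.

```typescript
// Options objects for webpack 5's ModuleFederationPlugin; names, paths and URLs are hypothetical.

// Shared dependency settings used by both sides: a single React instance at runtime.
const sharedDeps = {
  react: { singleton: true, requiredVersion: '^18.2.0' },
  'react-dom': { singleton: true, requiredVersion: '^18.2.0' },
};

// Remote (micro-frontend): publishes remoteEntry.js and exposes one module.
const remoteOptions = {
  name: 'settings',
  filename: 'remoteEntry.js',
  exposes: { './SettingsPage': './src/SettingsPage' },
  shared: sharedDeps,
};

// Host (container): maps the remote's name to the URL of its remoteEntry.js.
const hostOptions = {
  name: 'shell',
  remotes: { settings: 'settings@https://settings.cdn.example.com/remoteEntry.js' },
  shared: sharedDeps,
};
```

With this setup, the host can load the remote lazily via import('settings/SettingsPage'), and marking react as a shared singleton tells the runtime to use one copy across host and remote instead of loading it twice.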

Pros:

- Seamless integration with Webpack: If you are already using Webpack as your build tool, leveraging Module Federation simplifies the setup and integration process.

- Runtime flexibility: Module Federation enables dynamic loading and sharing of dependencies, providing flexibility in managing micro-frontends.

Cons:

- Limited framework support: While Module Federation is compatible with multiple frontend frameworks, it may require additional configuration or workarounds for specific use cases.

- Community support: Module Federation is a relatively new technology, released as a core plugin in Webpack 5 (and later back-ported to v4). Its Next.js integration is even newer, having only recently been released as open source. As with all new tools, there may be a smaller community and less support available. It’s important to consider this factor if you have tight deadlines or anticipate questions without readily available answers.

Conclusion

In this first part of the “Micro-frontend Migration Journey” series, we have discussed the motivation behind migrating from a web monolith to a distributed architecture and the initial steps taken to sell the idea to the leadership. We explored the importance of a technical vision document that showcased detailed performance analysis and outlined the different phases of the migration.

We then delved into the design considerations for micro-frontends, discussing three approaches: server-side composition, edge-side composition, and client-side composition. Each approach has its pros and cons, and the choice depends on various factors such as synchronization of the global state, customer experience, infrastructure complexity, and caching. Furthermore, we explored popular orchestration libraries, such as single-spa, qiankun and Module Federation, highlighting their features, benefits, and potential challenges.

Join me in the next parts of the series as we continue our micro-frontend migration journey, uncovering more interesting and valuable insights along the way!

Related articles:

- DDD in micro-frontends

- Micro-frontend migration journey – Part 2: Toolkit

- Micro-frontend Migration Journey – Part 3: Launch

Discover more from The Same Tech


Hi, thank you for sharing your experience!

Can you please provide more insight into the reasoning behind adopting microfrontends? It wasn’t quite clear from the post. You mentioned that before adopting microfrontends, there were some issues with the bundle size. Did you use code splitting based on dynamic imports or entries, for example? How did microfrontends help here?

I am asking because, from my personal experience, the pros of microfrontends may not be worth the cons, like the complexity of the setup and the sluggishness of many processes, the most sensitive being the dev build.

Hi, that’s a very good question! I believe I answered it in my original draft, but the answer somehow got lost between the lines during editing 🙂

I considered code splitting as an intermediate phase of the migration (I called this phase “Distributed monolith”), and it would solve the original problem (bundle size) perfectly. Moreover, I think it would handle this issue more gracefully than a micro-frontend architecture, considering that a micro-frontend architecture is very likely to have some shared code that would not be separated into vendor chunks and would be built into the application bundle (that’s one of the cons of such a distributed architecture: you need to make trade-offs about what to share, when, and how).

However, a distributed monolith architecture will not scale as well as micro-frontends. When your organization grows fast, your team will likely grow at the same pace. There will be an essential need to split the code base into different areas of ownership controlled by different teams. Each team will need its own release cycle, independent of the others, and each team will appreciate a code base that is focused purely on its domain and builds fast (code isolation -> better maintainability/less code to maintain and build -> better testability/fewer tests to maintain and execute). That was the main motivation behind my decision to migrate to micro-frontends, and it worked out pretty well for us! But I completely agree with you that micro-frontends should be considered a last resort when optimizing an app’s performance (mostly due to the high complexity of this architecture). But if you need to optimize your business efficiency (by properly dividing your organization into smaller, more domain-focused teams), micro-frontends are one of the best approaches I have seen so far!

Thank you for your feedback! I really appreciate that you read my article carefully and came up with such good questions and comments! I will update my article to reflect this better!