“Celebrate shipping things to users, rather than milestones that are only visible to internal teams. You don’t get to celebrate until users are happily using your system. If you’re doing a migration, celebrate that the old thing got turned off, not that the new thing got launched.”

The Staff Engineer’s Path

Welcome to the third installment of the “Micro-frontend Migration Journey” series! In Part 1, we explored the fundamental concepts of micro-frontend architecture, along with strategies and high-level designs for migrating to it. We discussed how breaking down monolithic applications into smaller, independent micro-frontends enables teams to work autonomously, improves development efficiency, and enhances user experience.

In Part 2, we dived into the implementation details of a micro-frontend architecture, focusing on the Micro-Frontend Toolkit. We discovered a collection of tools and libraries that simplify the development and integration of micro-frontends. The toolkit provided a set of APIs and utilities for dynamic orchestration, streamlined build processes, and shared configurations, empowering developers to create robust and maintainable frontend systems.

Now, in Part 3 of our journey, titled “Micro-frontend Migration Journey – Part 3: Launch,” we will explore the final phase of the migration process: launching the micro-frontend architecture into production. We will delve into the crucial steps, considerations, and best practices for successfully deploying and managing micro-frontends in a live environment.

Whether you are already on a micro-frontend migration journey or considering adopting this architectural approach, this article will provide valuable insights and guidance to help you navigate the launch phase and ensure a smooth transition to a micro-frontend ecosystem.

Let’s embark on this final leg of our journey and uncover the key aspects of launching micro-frontends in a production environment.

The finish line

Before diving into the next steps, let’s go through our checklist:

- System Architecture: Ensure you have decided on the design for your system architecture, including how you will orchestrate your micro-frontends and the library you will use for this purpose, if applicable.

- Development Toolkit: Verify that your development toolkit is working effectively. Make sure you can bootstrap new micro-frontend apps with minimal effort. If you have already prepared an onboarding document to educate developers on this process, even better!

- Pre-Prod Deployment: Confirm that you have a working prototype deployed to the Pre-Prod environment. This allows you to test and fine-tune your micro-frontends before moving to the production environment.

Now it’s time to plan a seamless rollout to the Production environment, ensuring a smooth transition for end users. However, we can’t neglect our legacy system: it’s safer to keep both the legacy system and the micro-frontend system deployed and available. We need an escape hatch in case of issues, one that lets us redirect users back to a safe place. This requires making some important design decisions.

Migration Strategy

In general, there are two main approaches to implementing the migration:

- Complete Rewrite: This involves a complete code freeze of your legacy app. It carries some risk as it may leave product features stale for a period of time. However, once the rewrite is done, it becomes less cumbersome to move forward.

- Strangler Pattern: Prioritize migration for business-critical parts of the legacy app. This approach allows you to provide value incrementally, releasing frequent updates and monitoring progress carefully. It minimizes the chances of freezing feature development and reduces the risk of architectural errors. The Strangler Pattern is also particularly useful because it lets developers evaluate the effectiveness of the initial micro-frontend releases and make any necessary adjustments.

If you still need to continue feature work alongside the architecture migration, that is achievable, but it requires a few considerations:

- Keep new micro-frontends modular. Encapsulate business functionality into a separate package, whether it’s in a monorepo or a multi-repo setup. External consumers, such as the micro-frontend shell and the legacy system, should only be concerned with integration points. Note that some micro-frontend orchestration libraries require applications to implement special contracts for integration, such as the lifecycle interface of the single-spa library. In this case, you can either export these APIs alongside the app’s main entry component, or produce two different entries for the two types of integration. Completing this encapsulation early on will unblock your teammates to start working on features while you (or the migration team) focus on integration concerns.

- After encapsulating the micro-frontend apps, you need to consume them in the legacy system as build-time dependencies. This can be done via static imports or via lazy loading with dynamic imports. Your legacy system must also be context-aware: in the micro-frontend ecosystem, the shell is responsible for loading these apps, so the legacy bundle must avoid bundling or lazy-loading them itself.

- Now, you have an important choice to make. What will happen to the legacy app when the migration is complete (assuming you chose the strangler migration approach)? Will it be completely eliminated, or will it become an application container (shell) focused solely on the orchestration and bootstrapping of other apps? In either case, your legacy app needs to produce bundles that work for both ecosystems (monolith vs. micro-frontend). The simplest approach is to have the legacy bundler output two bundles. You can have separate pipelines that invoke different commands for building (e.g., `build` vs. `build:mfe`), or you can use a single pipeline if you can tolerate longer build times.
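To make the three points above more concrete, here is a minimal sketch under several assumptions. A hypothetical "orders" micro-frontend exposes a plain render function as its main entry (what the legacy app imports at build time) alongside single-spa-style `bootstrap`/`mount`/`unmount` lifecycle functions (what the shell calls), and the legacy app builds its route table differently per build target. The `Host` type stands in for a DOM element so the sketch runs outside a browser; `renderOrders`, the `host` prop, `BuildTarget`, and `buildRoutes` are illustrative names, not part of single-spa.

```typescript
// Sketch of a hypothetical "orders" micro-frontend package plus the legacy app
// that consumes it. `Host` stands in for a DOM element so this runs outside a browser.
interface Host {
  innerHTML: string;
}

// ---- orders package: main entry (imported by the legacy app at build time) ----
export function renderOrders(host: Host): void {
  host.innerHTML = "<section>Orders</section>";
}

// ---- orders package: single-spa style lifecycles (called by the shell) ----
// `host` is a hypothetical custom prop; single-spa itself passes props such as `name`.
interface OrdersProps {
  host: Host;
}

export function bootstrap(_props: OrdersProps): Promise<void> {
  return Promise.resolve(); // one-time setup would go here
}

export function mount(props: OrdersProps): Promise<void> {
  renderOrders(props.host); // reuse the same main entry for both integrations
  return Promise.resolve();
}

export function unmount(props: OrdersProps): Promise<void> {
  props.host.innerHTML = "";
  return Promise.resolve();
}

// ---- legacy app: context-aware route table ----
// Hypothetical build target, e.g. selected per command (`build` vs. `build:mfe`).
type BuildTarget = "monolith" | "mfe";

interface Route {
  path: string;
  render: (host: Host) => void;
}

const legacyRoutes: Route[] = [
  { path: "/settings", render: (host) => { host.innerHTML = "<section>Settings</section>"; } },
];

export function buildRoutes(target: BuildTarget): Route[] {
  // In the micro-frontend ecosystem the shell mounts "orders" itself,
  // so the legacy bundle must not include it a second time.
  return target === "monolith"
    ? [...legacyRoutes, { path: "/orders", render: renderOrders }]
    : legacyRoutes;
}
```

In a real build, the target would ideally be a compile-time constant injected by your bundler for each command, so that dead-code elimination can drop the micro-frontend import from the `mfe` bundle entirely; the runtime parameter here only illustrates the branching.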

At the end of the day, your traffic routing picture might go through the following transformations:

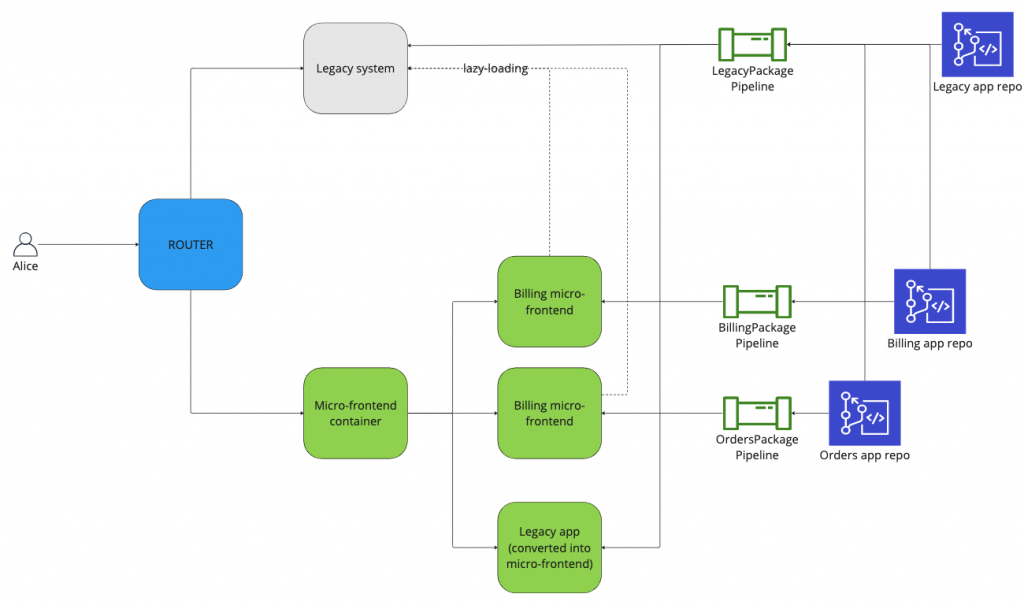

- Initial state after migration:

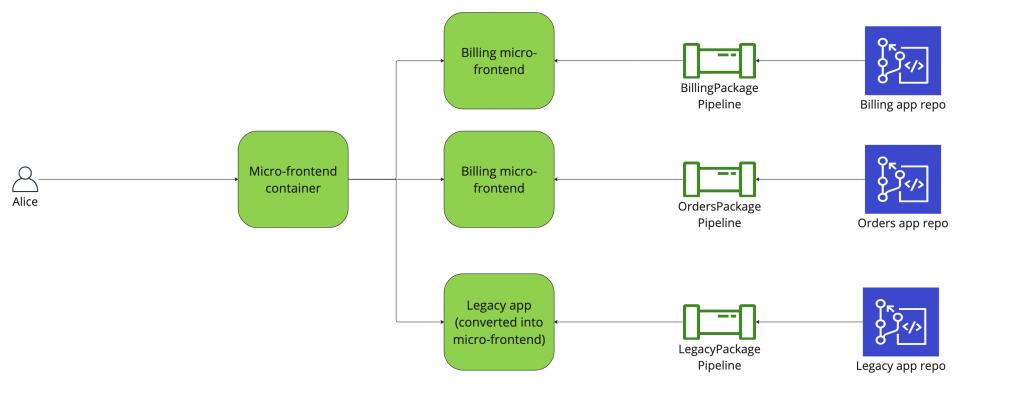

- The team migrates another app to a micro-frontend:

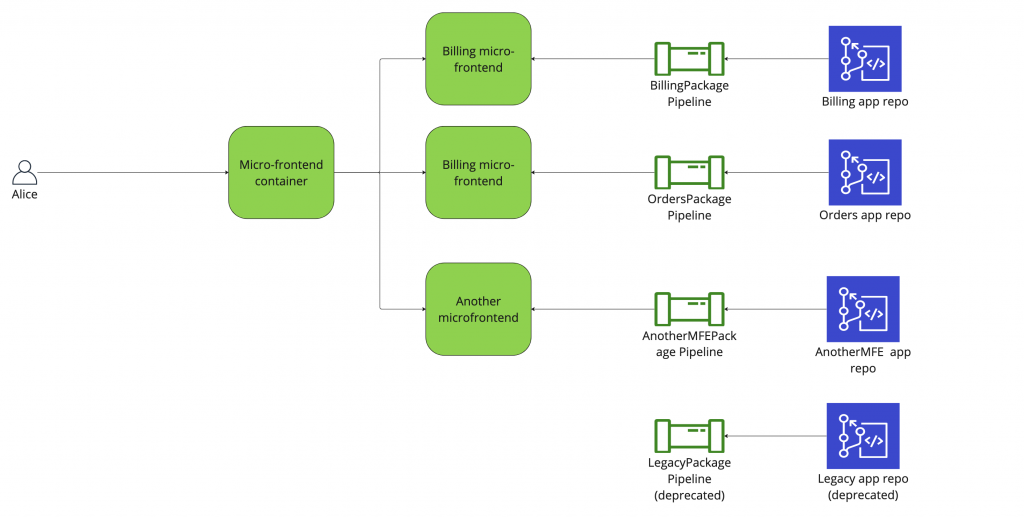

- The team is confident in the new architecture (the legacy system is deprecated):

- The legacy app has been completely decoupled (strangler migration completed):

By considering these migration strategies, you can make informed decisions on how to proceed with the transition to a micro-frontend architecture in the Production environment. Additionally, you may have noticed the presence of a mysterious router component in our architecture. In the next section, we will dive deeper into the various ways you can implement the router and explore the options available to seamlessly navigate between the legacy system and the micro-frontends.

Router

As mentioned earlier, it is important to provide users with an “escape hatch” in case of any issues or misconfigurations within the new micro-frontend architecture. This can be achieved through an application-level router that handles redirection to the old system or provides alternative navigation options.

The first question to address is where this router should live:

- On the client side. By utilizing the micro-frontend loader (see Part 2), you can implement a strategy that falls back to the legacy bundle if the metrics of the micro-frontend do not meet service-level objectives (max download latency threshold, max retries to download, etc.).

- On the server side. If you have a frontend server, API Gateway, or serverless function in your infrastructure, you can leverage them to handle the routing logic. This enables more centralized control and flexibility in managing the redirection process.

- On the edge. In cases where you employ edge-side composition of your micro-frontends (as explored in Part 1), you can utilize the same infrastructure to handle the routing. This approach allows for efficient and scalable routing at the edge, ensuring optimal performance and minimal latency.
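A client-side fallback could look roughly like the sketch below. It assumes the Part 2 loader can be wrapped as a function returning a Promise (the actual loader API may differ); `loadWithFallback`, `timeoutMs`, and `maxRetries` are hypothetical names mapping to the "max download latency" and "max retries" objectives mentioned above.

```typescript
// Hypothetical service-level objectives for loading one micro-frontend.
interface FallbackOptions {
  timeoutMs: number; // max download latency per attempt
  maxRetries: number; // extra attempts after the first one fails
}

// Reject if `promise` does not settle within `timeoutMs`. This only stops
// waiting; it does not cancel the underlying download.
function withTimeout<T>(promise: Promise<T>, timeoutMs: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error(`timed out after ${timeoutMs} ms`)), timeoutMs);
    promise.then(
      (value) => {
        clearTimeout(timer);
        resolve(value);
      },
      (error: unknown) => {
        clearTimeout(timer);
        reject(error);
      },
    );
  });
}

// Try the micro-frontend first; fall back to the legacy bundle once the SLOs are missed.
export async function loadWithFallback<T>(
  loadMicroFrontend: () => Promise<T>,
  loadLegacy: () => Promise<T>,
  options: FallbackOptions,
): Promise<T> {
  for (let attempt = 0; attempt <= options.maxRetries; attempt++) {
    try {
      return await withTimeout(loadMicroFrontend(), options.timeoutMs);
    } catch (error) {
      console.warn(`micro-frontend load attempt ${attempt + 1} failed`, error);
    }
  }
  return loadLegacy();
}
```

A production loader might also add backoff between retries and report every fallback to your metrics pipeline, so that a silent switch to the legacy bundle still shows up on a dashboard.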

Next, you need to determine the routing strategy for your micro-frontend architecture. Here are some options to consider:

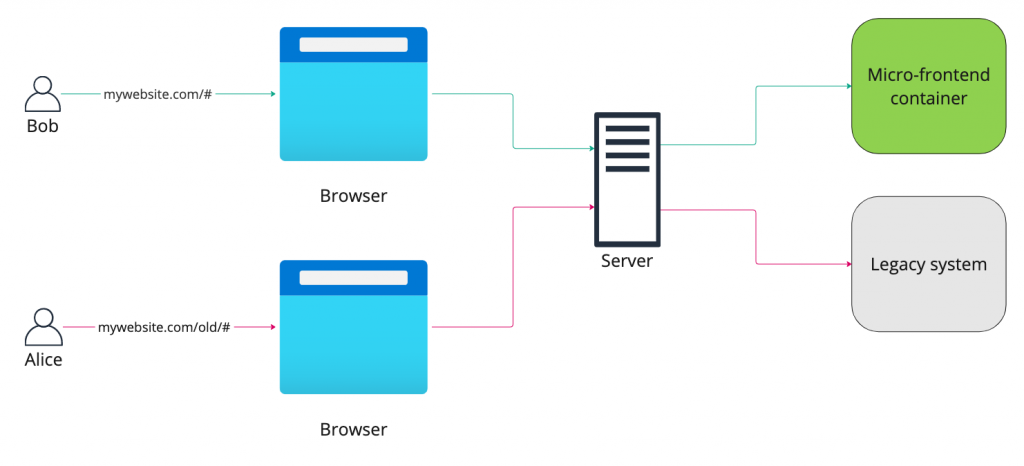

1. Direct URL. This is the simplest approach. By default, incoming traffic is routed to the page that fetches the micro-frontend shell script, and you reserve a special URL path that lets users access the old legacy system. This pattern is called Multi-Page Architecture (the traditional approach web developers used before the SPA era). In the user interface, you can implement a banner or notification that announces the change and provides a link to the old system. Additionally, the UI can offer an option to remember the user’s choice by persisting it in client storage (e.g., local storage) or a backend database. In the diagrams below, I use the generic word “Server” to cover all types of server infrastructure (SSR server, API Gateway, serverless, etc.).

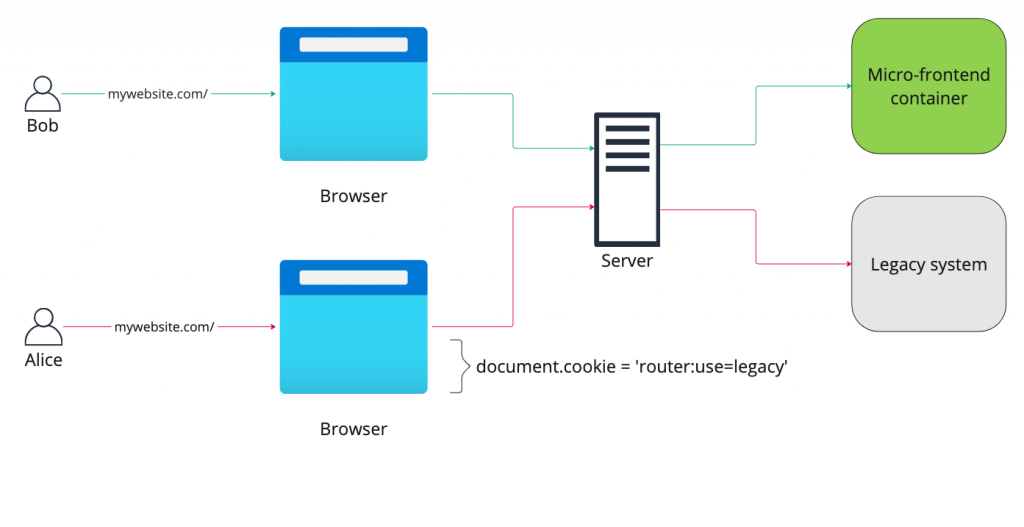

2. Cookies. Cookies have been a longstanding method for persisting data on the client and sharing it with the server. You can utilize this shared knowledge to implement your routing logic. By setting a specific cookie value, you can control the routing behavior of your micro-frontend system. For example, you can use a cookie to store the user’s preference for accessing the new system or the old legacy system. Based on the cookie value, you can redirect the user accordingly and ensure a seamless transition between the two systems.

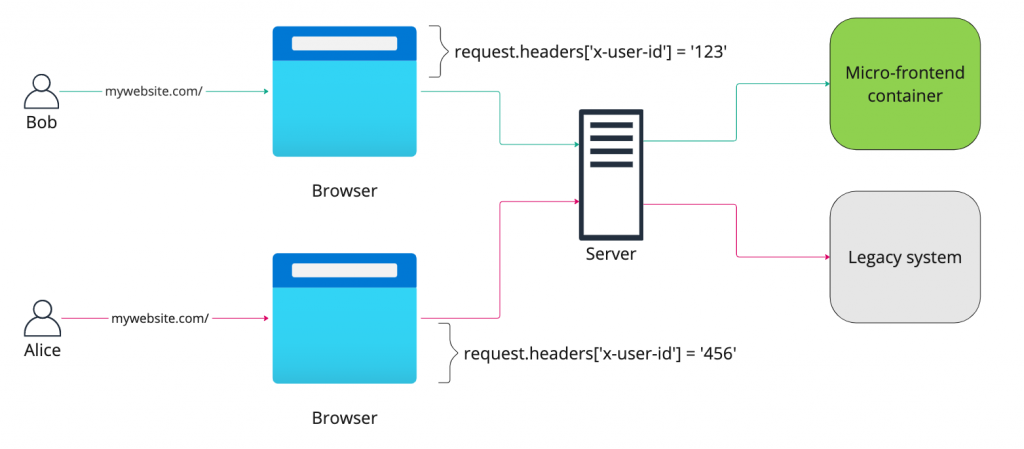

3. Traffic Splitting. This approach is similar to A/B testing: you provide access to the new micro-frontend system to only a fraction of users. Take a stable identifier, such as the user’s ID or IP address, and generate a hash from it. Then normalize the hash value (for example, by taking it modulo 100); if the resulting value is less than a threshold X, representing the percentage of users you want to grant access to, redirect the user to the new system. The remaining users continue to access the legacy system. Note that this approach doesn’t give the selected users an option to revert to the old system, so it may need to be combined with the previous routing strategies to cover all user scenarios.

4. Circuit Breaker. The circuit breaker approach is a data-driven strategy that requires centralized collection of metrics from clients. These metrics are then used to determine the routing for future user requests. Be cautious about requesting these metrics on demand, as this can hurt page load performance. Instead, consider caching aggregated metrics and refreshing them in the background at regular intervals. Useful metrics include the ratio of failed requests to total requests, average page load time, and infrastructure availability (CDN, blob storage). While it is possible to implement this approach client-side (using metrics collected in the field by each individual client), it can be challenging to make decisions without a holistic view of the entire system.
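These strategies are often combined. The sketch below shows one possible server-side decision function, assuming a reserved `/legacy` path, a hypothetical `mfe-preference` cookie, a simple non-cryptographic string hash for bucketing, and aggregated failure metrics that a background job refreshes periodically (as suggested for the circuit breaker) rather than fetching them on each request. All names and thresholds are illustrative; the function only decides the target, and your server, gateway, or edge function would then redirect or serve the matching page.

```typescript
// Illustrative names and thresholds: the "/legacy" path, the "mfe-preference"
// cookie, and RouterConfig are assumptions, not a real library's API.
type Target = "legacy" | "mfe";

interface RoutingRequest {
  path: string;
  cookieHeader?: string; // raw Cookie header, e.g. "theme=dark; mfe-preference=legacy"
  userId: string; // or the client IP address for anonymous users
}

// Aggregated client metrics, assumed to be refreshed by a background job
// rather than fetched on every request.
interface AggregatedMetrics {
  failedRequests: number;
  totalRequests: number;
}

interface RouterConfig {
  rolloutPercent: number; // X: share of users (0..100) sent to the new system
  maxFailureRatio: number; // the circuit opens above this failed/total ratio
}

const LEGACY_PREFIX = "/legacy";
const PREFERENCE_COOKIE = "mfe-preference";

function parseCookies(header: string | undefined): Map<string, string> {
  const cookies = new Map<string, string>();
  if (!header) return cookies;
  for (const part of header.split(";")) {
    const separator = part.indexOf("=");
    if (separator === -1) continue;
    cookies.set(part.slice(0, separator).trim(), part.slice(separator + 1).trim());
  }
  return cookies;
}

// Simple, stable, non-cryptographic hash (multiply by 31, keep unsigned 32 bits).
function hashString(input: string): number {
  let hash = 0;
  for (let i = 0; i < input.length; i++) {
    hash = (hash * 31 + input.charCodeAt(i)) >>> 0;
  }
  return hash;
}

function isCircuitOpen(metrics: AggregatedMetrics, config: RouterConfig): boolean {
  if (metrics.totalRequests === 0) return false; // no data yet: keep the circuit closed
  return metrics.failedRequests / metrics.totalRequests > config.maxFailureRatio;
}

export function decideTarget(
  request: RoutingRequest,
  metrics: AggregatedMetrics,
  config: RouterConfig,
): Target {
  // Direct URL: the reserved path always reaches the legacy system.
  if (request.path === LEGACY_PREFIX || request.path.startsWith(LEGACY_PREFIX + "/")) {
    return "legacy";
  }
  // Circuit breaker: if the new system looks unhealthy, send everyone back.
  if (isCircuitOpen(metrics, config)) {
    return "legacy";
  }
  // Cookies: honor an explicit user preference in either direction.
  const preference = parseCookies(request.cookieHeader).get(PREFERENCE_COOKIE);
  if (preference === "legacy") return "legacy";
  if (preference === "mfe") return "mfe";
  // Traffic splitting: a stable bucket in [0, 100) per user, compared with X.
  const bucket = hashString(request.userId) % 100;
  return bucket < config.rolloutPercent ? "mfe" : "legacy";
}
```

The ordering is a design choice: the reserved URL and an open circuit act as escape hatches that override everything else, an explicit cookie preference then lets users opt out of (or into) the new system, and the hash-based split only decides for users who expressed no preference. Because the bucket depends only on the identifier, a given user lands on the same side on every request.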

Is that all?

No! Many people consider deploying the system to be the last step in their launch plan, but this is wrong! A micro-frontend architecture is very dynamic and has a lot of moving parts that need to integrate with each other and live in harmony. It is crucial to have observability that gives us, the developers, visibility into how the system is functioning in Production (and not only on our local machines!). Collecting metrics from clients provides a comprehensive view of handled and unhandled errors, stack traces, and web vitals. It is also important to provide isolated metrics views and alarms for each micro-frontend app, so that the owning team can respond quickly to any issues. Implementing canary end-to-end tests that simulate user journeys in the production and pre-production environments will help identify potential integration issues early on.

Indeed, observability is a critical aspect that should be considered early in the process of building a micro-frontend architecture. In a distributed architecture, relying solely on customer feedback to identify and address issues is not sufficient. By implementing observability tools and practices, developers can proactively monitor the system, detect potential issues, and resolve them before customers even notice. Observability allows for real-time visibility into the performance, health, and behavior of the micro-frontends, enabling developers to identify and address issues quickly. By taking a proactive approach to observability, teams can deliver a high-quality user experience and ensure the smooth functioning of the micro-frontend architecture.
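As one illustration of per-app metrics views and alarms, the sketch below attributes client errors to the owning micro-frontend by looking for each app's bundle URL in the stack trace, then counts errors per app against an alarm threshold. The CDN URLs and the `OWNERS` list are hypothetical; in a browser, such errors would typically be captured from `window.addEventListener("error", ...)` and `"unhandledrejection"` handlers and shipped to your metrics backend, where a function like this would run.

```typescript
// Hypothetical mapping from the CDN path each team deploys under to the owning app.
const OWNERS: Array<[string, string]> = [
  ["https://cdn.example.com/shell/", "shell"],
  ["https://cdn.example.com/orders/", "orders"],
  ["https://cdn.example.com/legacy/", "legacy"],
];

interface ClientError {
  message: string;
  stack: string;
}

// Attribute an error to an app: the first stack frame (innermost in V8-style
// traces) that references a known bundle URL decides the owner.
export function ownerOf(error: ClientError): string {
  for (const frame of error.stack.split("\n")) {
    for (const [prefix, app] of OWNERS) {
      if (frame.includes(prefix)) {
        return app;
      }
    }
  }
  return "unattributed";
}

// Count errors per owning app and return the apps that reached the alarm
// threshold, so each team can get its own view and alarm.
export function appsToAlarm(errors: ClientError[], threshold: number): string[] {
  const counts = new Map<string, number>();
  for (const error of errors) {
    const owner = ownerOf(error);
    counts.set(owner, (counts.get(owner) ?? 0) + 1);
  }
  return Array.from(counts.entries())
    .filter(([, count]) => count >= threshold)
    .map(([app]) => app)
    .sort();
}
```

Attributing by bundle URL keeps the reporting code shared across all apps while still giving each team an isolated signal; errors that match no known bundle stay visible under "unattributed" instead of being dropped.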

Closing notes

In this article series, we have explored the journey of migrating to a micro-frontend architecture. We started by understanding the motivations behind adopting micro-frontends and the benefits they offer in terms of scalability, maintainability, and independent development. We then delved into the implementation details, discussing key concepts, tools, and strategies for successfully transitioning to a micro-frontend architecture.

From designing the system architecture and building the micro-frontend development toolkit to managing the deployment process and implementing effective routing strategies, we have covered important aspects of the migration journey. We also highlighted the significance of observability and the role it plays in ensuring the smooth operation of the micro-frontend architecture.

By embracing micro-frontends and following the best practices outlined in this article series, development teams can create modular, scalable, and resilient frontend systems. Micro-frontends enable teams to work independently, leverage different technologies, and deliver features more rapidly. With careful planning, thoughtful design, and a focus on observability, organizations can successfully navigate the migration to a micro-frontend architecture and unlock the full potential of this powerful approach.

Embrace the future of frontend development with micro-frontends and embark on your own migration journey to build robust, flexible, and user-centric applications.

Happy coding!

Articles in the series:

- Micro-frontend Migration Journey – Part 1: Design

- Micro-frontend Migration Journey – Part 2: Toolkit

Discover more from The Same Tech
